As AI continues to reshape software development, code generation models have become essential tools for developers seeking speed, accuracy, and productivity. Among the most talked-about models are DeepSeek-Coder, Code Llama by Meta, and OpenAI’s GPT-4 Turbo. Each offers unique strengths and trade-offs, from open-source accessibility to advanced reasoning capabilities. This article explores how DeepSeek-Coder compares to its leading counterparts, focusing on performance, usability, and real-world application. Whether you’re an individual coder or part of a tech team, understanding these differences can help you choose the right model for your workflow.

Overview of the Models

DeepSeek-Coder

Purpose and Target Users

DeepSeek-Coder is designed for developers, researchers, and engineers looking for a powerful, open-source code generation model. It aims to democratize access to high-performance code models for both academic and commercial use.

Training Data and Architecture

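The model is trained on a large corpus dominated by public code repositories, alongside documentation and other developer-oriented natural-language text. According to the DeepSeek-Coder technical report, the first release was trained from scratch on about 2 trillion tokens (roughly 87% code and 13% natural language in English and Chinese) and is offered in sizes from 1.3B to 33B parameters. It uses a decoder-only transformer architecture, and its training on repository-level data with a fill-in-the-middle objective targets code-specific tasks such as generation, completion, and debugging.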

Supported Languages

DeepSeek-Coder supports multiple programming languages, including Python, JavaScript, C++, Java, and Go, with a strong emphasis on multilingual performance and adaptability.

Code Llama (by Meta)

Variants and Intended Use

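Code Llama comes in multiple sizes (7B, 13B, and 34B parameters at launch, with a 70B model added later) to balance resource efficiency and performance. Each size is offered in three variants: the base model, Code Llama - Python (specialized for Python), and Code Llama - Instruct (tuned to follow natural-language instructions). It is geared toward software engineers, educators, and AI researchers looking for scalable solutions to code generation, completion, and understanding.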

Special Capabilities

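Beyond multi-language coding, Code Llama offers instruction tuning through its Instruct variant, and the 7B and 13B models also support infilling, that is, completing code between an existing prefix and suffix. This makes it well-suited for context-aware tasks and educational environments. It tends to generate readable, maintainable code and handles structured prompts well.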

GPT-4 Turbo (OpenAI)

Known Strengths in Reasoning and Code Generation

GPT-4 Turbo is one of the most advanced proprietary models, recognized for its high-level reasoning, precision in code generation, and ability to handle complex logic and debugging tasks across languages.

Integration with Tools

GPT-4 Turbo is available through OpenAI’s API and in ChatGPT, where the code interpreter feature can run and test Python code, and GPT-4-family models have also powered developer tools such as GitHub Copilot Chat. These integrations enhance its utility for real-time collaboration, testing, and full-stack development workflows.

Performance Comparison

Code Completion Accuracy

Benchmark Results

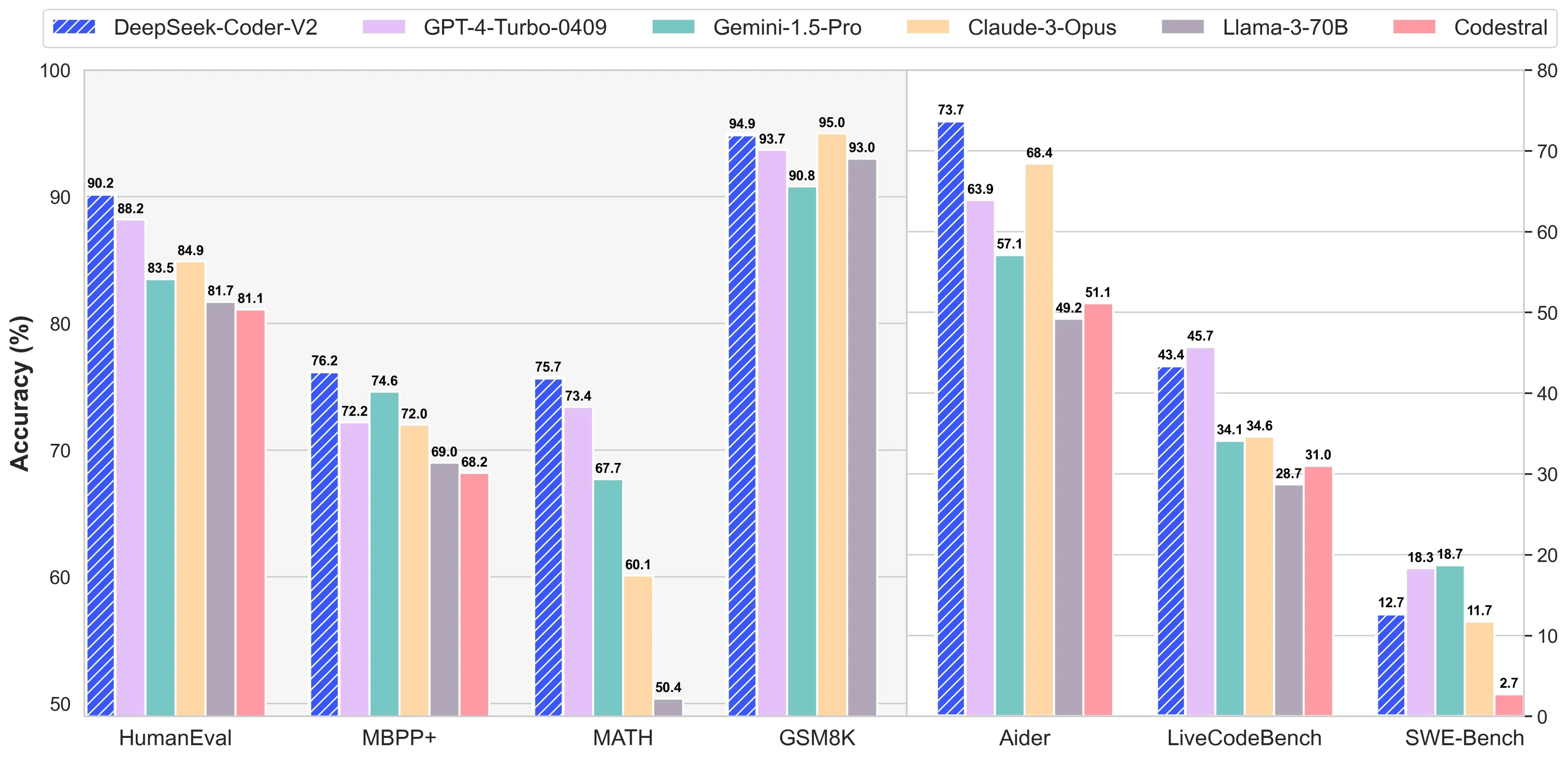

DeepSeek-Coder, Code Llama, and GPT-4 Turbo have all been evaluated on standard code benchmarks such as HumanEval and MBPP, which measure how often a model’s generated code passes reference unit tests for short programming problems. DeepSeek-Coder performs competitively, particularly in Python and JavaScript, while GPT-4 Turbo often handles edge cases better, likely because of its broader training data.
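HumanEval and MBPP scores are usually reported as pass@k: the probability that at least one of k sampled completions passes the unit tests. The sketch below implements the unbiased estimator published alongside HumanEval, assuming n completions were sampled per problem and c of them passed. The per-problem counts in the example are made up for illustration and are not results for any of these models.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: completions sampled for the problem
    c: how many of those completions passed the unit tests
    k: number of attempts the metric allows
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # With fewer than k failures, every draw of k samples includes a pass.
    if n - c < k:
        return 1.0
    # 1 - P(all k drawn samples are failures), drawing without replacement.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over (n, c) pairs, one pair per benchmark problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical results: 10 samples per problem with 10, 3, and 0 passes.
results = [(10, 10), (10, 3), (10, 0)]
print(round(benchmark_pass_at_k(results, k=1), 4))  # 0.4333
```

For k = 1 the estimator reduces to c / n, the fraction of samples that passed; larger k rewards models whose correct answers appear somewhere among several attempts.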

Real-World Code Examples

Beyond benchmarks, DeepSeek-Coder tends to deliver reliable completions in common development environments. It is effective at suggesting logic flows, writing boilerplate, and filling in function bodies, which makes it suitable for everyday software engineering tasks.
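Filling in a function is typically done with a fill-in-the-middle (FIM) prompt: the model receives the code before and after a gap and generates what belongs in between. The sketch below only builds such a prompt string. The default sentinel tokens are the ones shown in the DeepSeek-Coder README at the time of writing and should be treated as an assumption to verify against the tokenizer you load; other models, including Code Llama, use different sentinels, which is why they are parameters.

```python
# Sentinel tokens for DeepSeek-Coder's FIM format, as shown in its README.
# ASSUMPTION: verify these exact strings against the tokenizer you load.
FIM_BEGIN = "<｜fim▁begin｜>"
FIM_HOLE = "<｜fim▁hole｜>"
FIM_END = "<｜fim▁end｜>"


def build_fim_prompt(prefix: str, suffix: str,
                     begin: str = FIM_BEGIN, hole: str = FIM_HOLE,
                     end: str = FIM_END) -> str:
    """Return a prefix/hole/suffix prompt; the model generates the hole."""
    return f"{begin}{prefix}{hole}{suffix}{end}"


prefix = (
    "def mean(values):\n"
    "    if not values:\n"
    "        raise ValueError('empty input')\n"
)
suffix = "    return total / len(values)\n"
prompt = build_fim_prompt(prefix, suffix)
# A capable model should fill the hole with something like:
#     total = sum(values)
print(prompt)
```

The model’s output is inserted at the hole position, so the editor plugin or script can splice it back between the original prefix and suffix.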

Multi-Language Support

Programming Language Coverage

DeepSeek-Coder supports a wide range of languages, including Python, Java, JavaScript, C++, Go, and TypeScript. It holds up well against Code Llama, which is also tuned for multi-language code generation. GPT-4 Turbo, though a general-purpose model, interprets and generates code in an even wider range of languages, including niche ones, likely thanks to its more extensive training data.

Performance on Lesser-Known Languages

DeepSeek-Coder is steadily improving in less commonly used languages like Rust and Ruby, but GPT-4 Turbo currently maintains the edge in these scenarios, likely because of more diverse training data.

Context Handling

Token Limit and Memory Capabilities

Context length is a critical factor for coding models. DeepSeek-Coder supports up to 16K tokens, enough to hold a medium-sized module and track complex functions within it. GPT-4 Turbo accepts up to 128K tokens, making it well suited to large project files. Code Llama was trained on 16K-token sequences, and Meta reports stable generations with up to 100K tokens of context, which is sufficient for many standard tasks.
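Whether a set of files fits in a model’s window can be estimated before sending a request. The sketch below uses a rough four-characters-per-token heuristic (an assumption; real counts depend on each model’s tokenizer), the 16K and 128K windows given for DeepSeek-Coder and GPT-4 Turbo, and Code Llama’s 16K-token training length as a conservative budget (also an assumption). It reserves part of the window for the model’s reply.

```python
# Approximate context windows in tokens. ASSUMPTION: check current provider
# documentation; Code Llama is given its 16K training length as a budget.
CONTEXT_WINDOWS = {
    "deepseek-coder": 16_384,
    "code-llama": 16_384,
    "gpt-4-turbo": 128_000,
}

CHARS_PER_TOKEN = 4  # rough heuristic for code and English, not exact


def estimate_tokens(text: str) -> int:
    """Crude token estimate; use the model's own tokenizer for real counts."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(files: dict[str, str], model: str,
                    reserve_for_output: int = 2_000) -> bool:
    """True if all file contents plus an output reserve fit the window."""
    used = sum(estimate_tokens(src) for src in files.values())
    return used + reserve_for_output <= CONTEXT_WINDOWS[model]


# Hypothetical project: three files of about 20,400 characters each.
project = {f"module_{i}.py": "x = 1\n" * 3_400 for i in range(3)}
for name in CONTEXT_WINDOWS:
    print(name, fits_in_context(project, name))
```

With these placeholder files (about 15,300 estimated tokens plus a 2,000-token reserve), the project overflows a 16K window but fits comfortably in 128K.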

Large-Scale Project Handling

When working with multi-file projects or long scripts, DeepSeek-Coder is capable but may require structured prompts. GPT-4 Turbo, on the other hand, maintains coherence over much larger contexts with less user intervention.
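One common way to give a model structured multi-file context is to label each file with its path and include files in order of relevance until a token budget is spent. The sketch below does this with the same rough four-characters-per-token heuristic; the `### File:` header format and the relevance ordering are illustrative choices, not a format any of these models requires.

```python
CHARS_PER_TOKEN = 4  # rough heuristic; the model's tokenizer gives real counts


def approx_tokens(text: str) -> int:
    """Crude estimate that errs on the high side."""
    return len(text) // CHARS_PER_TOKEN + 1


def build_project_prompt(files: list[tuple[str, str]], task: str,
                         token_budget: int) -> tuple[str, list[str]]:
    """Pack files (most relevant first) into one prompt under a token budget.

    Each file is labeled with its path so the model can tell files apart.
    Returns the prompt and the paths of files that did not fit.
    """
    task_block = f"### Task\n{task}\n"
    used = approx_tokens(task_block)
    parts: list[str] = []
    skipped: list[str] = []
    for path, source in files:
        block = f"### File: {path}\n{source.rstrip()}\n"
        cost = approx_tokens(block)
        if used + cost > token_budget:
            skipped.append(path)  # skip it, but keep trying later files
            continue
        parts.append(block)
        used += cost
    parts.append(task_block)
    return "\n".join(parts), skipped


# Hypothetical files, ordered from most to least relevant to the task.
files = [
    ("app/models.py", "class User:\n    name: str\n"),
    ("app/views.py", "def index():\n    return 'ok'\n"),
    ("tests/fixtures.py", "DATA = 0\n" * 5_000),
]
prompt, skipped = build_project_prompt(
    files, "Add an email field to User.", token_budget=1_000
)
print(skipped)  # ['tests/fixtures.py']
```

Putting the task last keeps the instruction closest to where the model starts generating, and reporting skipped files lets the user decide whether to summarize them or raise the budget.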

Debugging and Code Explanation

Bug Detection and Fixing

DeepSeek-Coder is proficient at identifying syntax errors, logical flaws, and runtime issues when given enough context. Because its weights are openly available, it can be fine-tuned on domain-specific debugging patterns. GPT-4 Turbo remains stronger in multi-step reasoning, often providing not only fixes but also the rationale behind them.
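A first step toward fine-tuning DeepSeek-Coder on a team’s own debugging history is turning pairs of buggy and fixed code into instruction-style training records. The sketch below writes such records as JSON Lines. The `BugFix` type and the field names (`instruction`, `input`, `output`) are hypothetical, following a common instruction-tuning convention; match them to whatever your fine-tuning script expects.

```python
import json
from dataclasses import dataclass


@dataclass
class BugFix:
    """One historical fix: code before and after, plus a short explanation."""
    buggy: str
    fixed: str
    note: str


def to_record(fix: BugFix) -> dict[str, str]:
    # Field names are a common convention, not a format any model requires.
    return {
        "instruction": "Find and fix the bug in the following code. "
                       "Explain the fix briefly.",
        "input": fix.buggy,
        "output": f"{fix.fixed}\n# Explanation: {fix.note}",
    }


def write_jsonl(fixes: list[BugFix], path: str) -> int:
    """Write one JSON object per line; return the number of records."""
    with open(path, "w", encoding="utf-8") as fh:
        for fix in fixes:
            fh.write(json.dumps(to_record(fix), ensure_ascii=False) + "\n")
    return len(fixes)


example = BugFix(
    buggy="def last(xs):\n    return xs[len(xs)]",
    fixed="def last(xs):\n    return xs[-1]",
    note="xs[len(xs)] is out of range; the last element is xs[-1].",
)
print(json.dumps(to_record(example), indent=2))
```

Including the explanation in the target output nudges the fine-tuned model to justify its fixes rather than only emit patched code.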

Explaining Complex Code

DeepSeek-Coder can explain code logic in clear, technical language, especially for common programming patterns. However, GPT-4 Turbo stands out in its ability to break down highly abstract or domain-specific code for educational or onboarding purposes.

Ease of Use and Integration

Platform Availability

DeepSeek-Coder is available on Hugging Face and GitHub, so developers can download, test, and deploy it directly. Code Llama is also distributed through Hugging Face, supporting the same kind of open experimentation. GPT-4 Turbo, by contrast, is accessible only through OpenAI’s API and products built on it, such as ChatGPT. DeepSeek-Coder’s open access lowers the barrier to getting started: no subscription or proprietary account is needed.
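As an illustration of the Hugging Face route, the sketch below loads an instruct checkpoint with the `transformers` library and generates a reply. `deepseek-ai/deepseek-coder-6.7b-instruct` is one of the published checkpoints; the generation settings are arbitrary choices. Running `generate` requires `transformers`, `torch`, `accelerate` (for `device_map="auto"`), and enough memory for a 6.7B-parameter model, so those imports sit inside the function and the message helper works without them.

```python
MODEL_ID = "deepseek-ai/deepseek-coder-6.7b-instruct"


def build_messages(request: str) -> list[dict[str, str]]:
    """Chat-style input in the shape tokenizer.apply_chat_template expects."""
    return [{"role": "user", "content": request}]


def generate(request: str, max_new_tokens: int = 256) -> str:
    # Imported lazily: these packages and the model weights are large.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype=torch.bfloat16, device_map="auto"
    )
    input_ids = tokenizer.apply_chat_template(
        build_messages(request), add_generation_prompt=True, return_tensors="pt"
    ).to(model.device)
    output = model.generate(input_ids, max_new_tokens=max_new_tokens,
                            do_sample=False)  # greedy decoding
    # Decode only the newly generated tokens, not the echoed prompt.
    return tokenizer.decode(output[0][input_ids.shape[1]:],
                            skip_special_tokens=True)
```

Calling `generate("Write a Python function that checks whether a string is a palindrome.")` downloads the weights on first use and returns the model’s answer as plain text.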

IDE and Developer Tool Compatibility

DeepSeek-Coder can be integrated into common development environments such as VS Code and Jupyter Notebooks using standard APIs and local model serving. GPT-4-family models are the easiest to bring into the editor, through services like GitHub Copilot that offer in-editor suggestions with little setup. Code Llama supports IDE integration via third-party plugins or custom setups, although it may require more configuration than GPT-based tools.
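Much of this integration runs over HTTP. Local serving tools such as vLLM and Ollama can expose an OpenAI-compatible chat completions endpoint, so the same client code can target a locally served open model or OpenAI’s hosted API by changing only the base URL and model name. The sketch below uses only the Python standard library; the local URL, port, and model names in `BACKENDS` are assumptions to adapt to your setup.

```python
import json
import urllib.request

# ASSUMPTIONS: adjust base URLs, model names, and keys to your deployment.
BACKENDS = {
    "local-deepseek": ("http://localhost:8000/v1",
                       "deepseek-ai/deepseek-coder-6.7b-instruct"),
    "openai": ("https://api.openai.com/v1", "gpt-4-turbo"),
}


def build_request(backend: str, prompt: str, api_key: str = "",
                  temperature: float = 0.0) -> urllib.request.Request:
    """Build (but do not send) a chat completions request for a backend."""
    base_url, model = BACKENDS[backend]
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # local servers often need no key
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def complete(request: urllib.request.Request) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(request, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

An editor extension built this way can switch between a self-hosted DeepSeek-Coder and GPT-4 Turbo through configuration alone, which keeps the comparison between them cheap to run.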

Community and Documentation Support

Because DeepSeek-Coder is relatively new, its documentation is still growing, with active contributions on GitHub and the Hugging Face forums. Code Llama benefits from a strong open-source community, while GPT-4 Turbo is backed by extensive official documentation and community-driven content. For teams that rely on robust support, GPT-4 Turbo leads in maturity, but DeepSeek-Coder’s momentum is building rapidly.

Licensing and Accessibility

Open-Source vs. Proprietary Licensing

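DeepSeek-Coder and Code Llama are openly available: their weights can be downloaded, inspected, fine-tuned, and run on your own hardware. GPT-4 Turbo is proprietary; its weights are not published, and it can be used only through OpenAI’s API and products. For developers, open weights mean more transparency and control: the model runs where you choose, can be customized for a specific codebase or domain, and does not change unless you update it. Strictly speaking, both open models ship under custom model licenses rather than standard open-source licenses, so “open” here describes access to the weights more than a particular license.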

Cost Considerations

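The cost structures differ. Open models such as DeepSeek-Coder and Code Llama carry no per-token fee, but self-hosting them means paying for GPUs (on-premises or in the cloud), storage, and the engineering time to operate them; the smaller variants can run on a single workstation GPU, while the largest need substantially more hardware. GPT-4 Turbo is billed through OpenAI’s API by usage, priced per token of input and output, while products such as ChatGPT and GitHub Copilot are sold as subscriptions. Light or irregular use tends to favor paying per token, whereas sustained high volume can make self-hosting cheaper.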
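A rough way to compare usage-based and self-hosted costs is to estimate monthly API spend from token volume and set it against the fixed cost of running GPUs. The sketch below does that arithmetic. Every price and volume in it is a hypothetical placeholder, not a quoted rate for any provider, so substitute current figures from your own pricing pages and hosting bills.

```python
from dataclasses import dataclass


@dataclass
class ApiPricing:
    """Per-million-token prices in USD (hypothetical placeholders)."""
    input_per_million: float
    output_per_million: float


def monthly_api_cost(pricing: ApiPricing, requests_per_day: int,
                     input_tokens: int, output_tokens: int,
                     days: int = 30) -> float:
    """Usage-based cost: grows linearly with requests and tokens."""
    total_in = requests_per_day * input_tokens * days
    total_out = requests_per_day * output_tokens * days
    return (total_in * pricing.input_per_million
            + total_out * pricing.output_per_million) / 1_000_000


def monthly_self_host_cost(gpu_hourly_rate: float, gpus: int,
                           hours_per_day: float = 24, days: int = 30) -> float:
    """Fixed infrastructure cost, independent of how many tokens you use."""
    return gpu_hourly_rate * gpus * hours_per_day * days


# Placeholder figures only; they are not real prices for any provider.
api = ApiPricing(input_per_million=5.0, output_per_million=15.0)
print(monthly_api_cost(api, requests_per_day=1_000,
                       input_tokens=1_500, output_tokens=500))  # 450.0
print(monthly_self_host_cost(gpu_hourly_rate=2.0, gpus=1))  # 1440.0
```

Because API spend scales with volume while self-hosting is mostly fixed, the cheaper option flips once daily usage passes the point where the two functions return the same value; staff time for operating the servers should be added to the self-hosted side.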

Commercial Usage Rights and Restrictions

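The terms for commercial use differ by model. DeepSeek-Coder’s code is released under the MIT License and its model weights under the DeepSeek Model License, which permits commercial use subject to use-based restrictions. Code Llama is covered by the same community license as Llama 2, which allows commercial use but requires a separate license from Meta for products with more than 700 million monthly active users and includes an acceptable use policy. GPT-4 Turbo is governed by OpenAI’s terms of service and usage policies, which allow building commercial products on the API but provide no access to the weights. Because license terms can change, developers and businesses should review the current text of each license, and any compliance requirements it imposes, before deployment.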

Use Cases & Recommendations: Choosing the Right Code Model for Your Needs

Ideal Scenarios for Each Model

- DeepSeek-Coder: Best suited for open-source projects, small teams, or individual developers who prioritize flexibility and customization. It excels in multilingual support and adaptable code generation.

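- Code Llama: Ideal for developers who want an open model focused on code completion and debugging across a wide range of languages. Its choice of sizes and its Python and Instruct variants make it a strong choice for both enterprise environments and hobbyists, provided they are comfortable with some setup for editor integration.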

- GPT-4 Turbo: The go-to solution for high-level reasoning tasks, detailed code explanations, and large-scale enterprise use, especially when working with complex systems or needing robust AI tools integrated with development platforms.

Which Model to Choose Based on Team Size, Budget, and Goals

- Small Teams & Budgets: DeepSeek-Coder’s open-source nature makes it a cost-effective choice for startups or small teams with limited resources but strong development needs.

- Medium to Large Teams: Code Llama is a solid middle ground, offering powerful features for both experienced developers and larger teams requiring high-performance models for a variety of coding tasks.

- Enterprise-Level & Premium Solutions: GPT-4 Turbo, though a premium option, offers the broadest versatility and the tightest integration with enterprise tools, making it a strong fit for large organizations looking for robust AI-assisted development workflows.

Conclusion

In conclusion, DeepSeek-Coder offers strong performance as an open-source, multilingual code generation tool, but it faces challenges in ecosystem maturity compared with more established models like Code Llama and GPT-4 Turbo. Code Llama excels in code completion, and GPT-4 Turbo shines with advanced reasoning capabilities and integrations. Each model has its own strengths, so the choice depends on specific project needs, whether that’s flexibility, integration, or advanced features. Developers should carefully assess factors like budget, complexity, and long-term scalability when selecting the most suitable tool for their workflow.